The Trump administration’s increasing use of artificial intelligence-generated imagery in public communications has drawn concern from experts who say it may contribute to public distrust in official information.

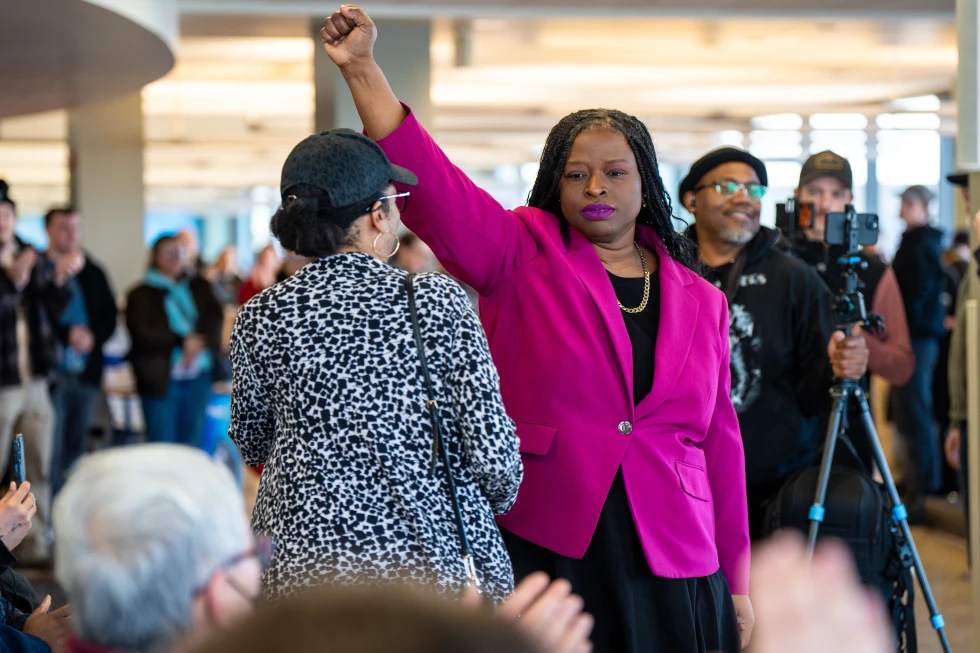

The White House and affiliated social media accounts have shared a range of AI-created visuals, including stylized cartoons and memes. Some recent content, however, has prompted debate about where satire ends and misleading representation begins. One widely circulated image depicted civil rights attorney Nekima Levy Armstrong appearing to cry during her arrest at an immigration protest, a version that differed markedly from original footage of the event.

The original photograph, which showed Levy Armstrong with a neutral expression, was first posted by the Homeland Security secretary’s account; a later post on an official White House account showed the altered version. In response, Levy Armstrong released a longer video, recorded by her husband, that offered a different view of her arrest, showing her being handcuffed and speaking calmly with agents. She has criticized the altered image, saying it misrepresented her actions and was part of a broader effort to shape public perception of immigration protests.

Misinformation researchers say the spread of AI-generated or edited content from authoritative sources could blur the distinction between factual reporting and manipulated material. They argue that such content may amplify confusion among audiences, particularly when shared widely on social media platforms. Some specialists have urged the development of technological and regulatory measures, such as digital watermarking, to help identify AI-generated media, though comprehensive solutions have yet to be implemented.

Debate over the use of AI in official communication has occurred against a backdrop of broader discussion about the technology’s impact on public information ecosystems, including concerns about deepfake videos and the role of generative tools in political messaging.